Australian Businesses Under Siege By AI-Enabled Cyber Attacks: 23% Rise In One Year

As the AI industry grows and changes, new risks are appearing. These risks could cost the Australian government and local businesses millions of dollars. This report explains key findings from government sources, the challenges AI creates for businesses, and practical solutions to reduce these threats.

Key Findings

The amount of AI-made (synthetic) content online is on the rise:

Experts predict that by 2027, up to 90% of online material could be at least partly created by AI. This content is becoming so realistic that it is almost impossible to tell apart from real content. Because of this, tools like Content Credentials, which confirm where content comes from, will be essential to protect the online information space.1

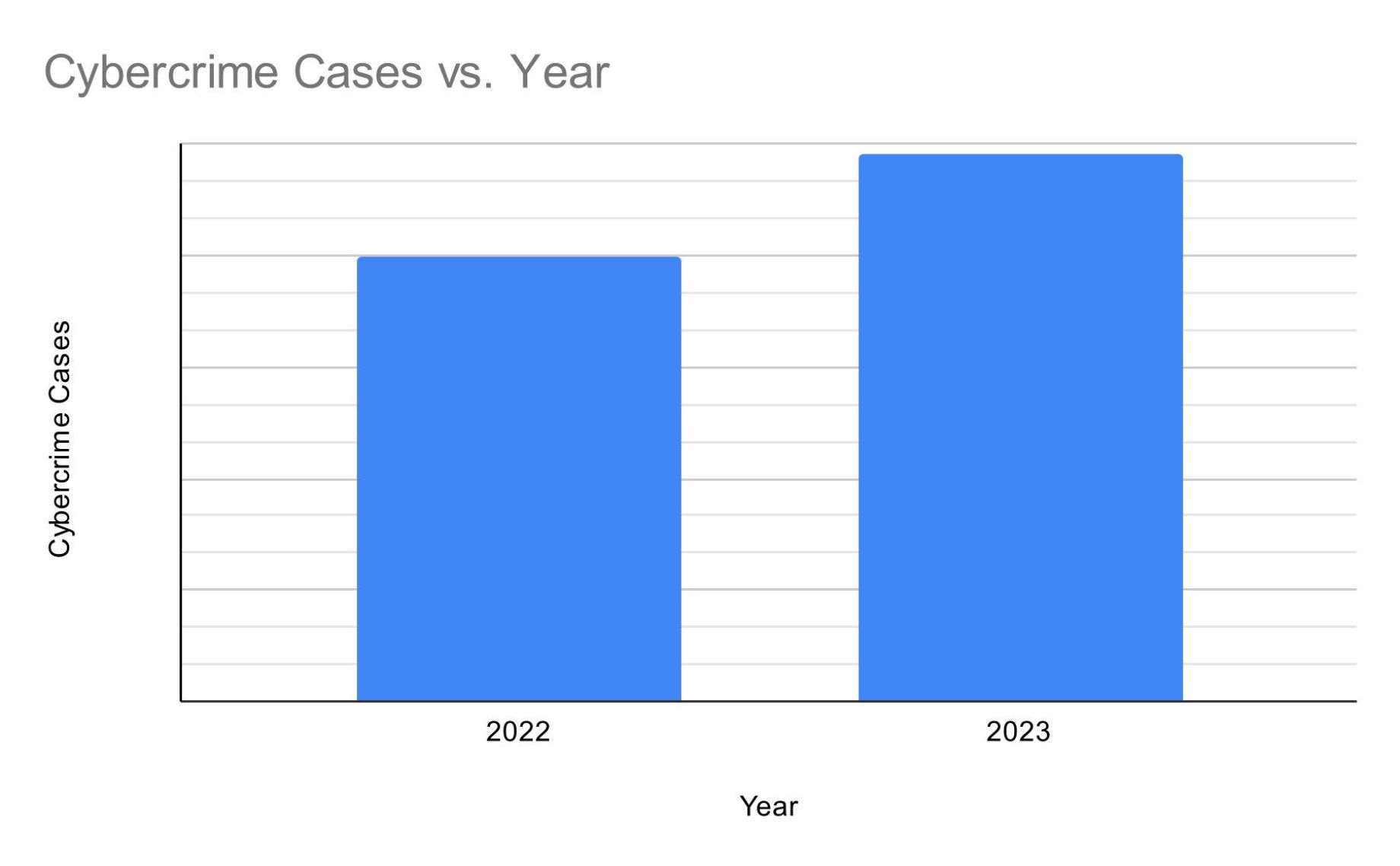

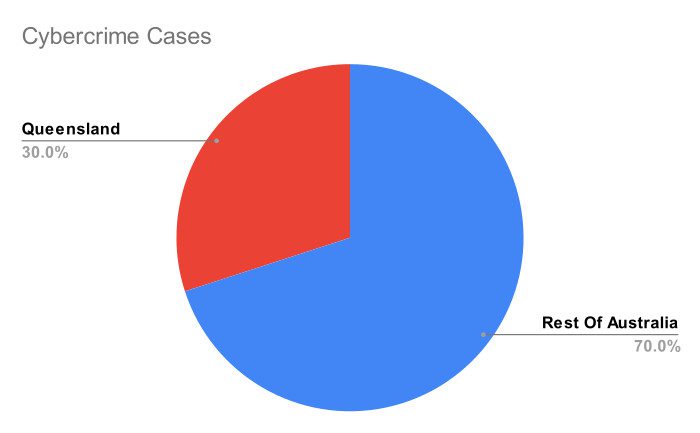

Almost 94,000 cybercrime cases were reported to the Australian Cyber Security Centre (ACSC) in 2022–23:

That is 23% more than the year before. Queensland accounted for 30% of these reports, a disproportionately high share relative to its population. About one in every eight reports came from state or local government entities.2

Businesses and individuals reported almost $84 million in losses:

More than 1,400 reports involved financial losses, with the average loss per case exceeding $55,000. Queensland recorded the highest number of confirmed cases, with 434 reports.3

Essential Eight maturity levels are down:

An Australian Signals Directorate (ASD) survey shows that few organisations have reached Maturity Level 2 across all eight mitigation strategies of the Essential Eight. In 2024, only 15% achieved this level, down from 25% in 2023.4

Key Problems

The misuse of AI-generated media poses a serious cyber threat to organisations:

This includes impersonating executives and sending fraudulent messages to gain access to networks and sensitive data. At the same time, public trust in organisational media is rapidly declining, making transparency essential to maintaining credibility.5

Possible Solution: Content Credentials

While technologies like watermarking can assist with media provenance, Content Credentials are an emerging standard that greatly improves transparency. They outline how software and hardware involved in creating, editing, and distributing media should record, verify, and manage provenance information. Implementing Content Credentials enables users to make more informed decisions about the media they consume.6
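The idea of recording and verifying provenance can be illustrated with a minimal sketch. This is not the Content Credentials format itself: it assumes a simplified record that binds creator details to a SHA-256 hash of the content and signs the result with a shared HMAC key. The key, field names, and the `make_record` and `verify_record` helpers are all hypothetical, and real implementations rely on certificate-based signatures rather than a shared secret.

```python
import hashlib
import hmac
import json

# Hypothetical demo key. Real provenance systems use certificate-based
# signatures, not a shared secret stored in code.
SIGNING_KEY = b"demo-key-not-for-production"


def make_record(content: bytes, creator: str, tool: str) -> dict:
    """Build a provenance record bound to the content by its SHA-256 hash."""
    claim = {
        "creator": creator,
        "tool": tool,
        "sha256": hashlib.sha256(content).hexdigest(),
    }
    payload = json.dumps(claim, sort_keys=True).encode()
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_record(content: bytes, record: dict) -> bool:
    """Return True only if the record is untampered and matches the content."""
    payload = json.dumps(record["claim"], sort_keys=True).encode()
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, record["signature"]):
        return False  # the claim was edited after signing
    return record["claim"]["sha256"] == hashlib.sha256(content).hexdigest()


original = b"official media release"
record = make_record(original, creator="Comms Team", tool="ExampleEditor 1.0")

print(verify_record(original, record))                  # content unchanged
print(verify_record(b"altered media release", record))  # content modified
```

The design point is that any change to either the media or its recorded history invalidates the check, which is what lets a viewer trust the stated origin.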

Data Poisoning & Adversarial Inputs:

AI models are trained on large datasets, and the quality of this data directly impacts performance. If a malicious actor tampers with the training data, the AI can be manipulated into making flawed or incorrect decisions.

Once an AI system is deployed, malicious actors may provide carefully designed inputs or prompts that cause the system to make errors, such as producing sensitive or harmful content.7

Possible Solution: Anomaly detection & Essential Eight

Apply anomaly detection algorithms during data pre-processing to identify and filter out suspicious or malicious data points before training. These algorithms detect unusual patterns in the dataset, helping to isolate and remove poisoned inputs.8
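As a rough illustration of this pre-processing step, the sketch below flags outliers in a single numeric feature using the modified z-score (based on the median and the median absolute deviation). The choice of technique, the `filter_outliers` helper, the 3.5 threshold, and the sample values are assumptions made for illustration; the source does not prescribe a specific algorithm.

```python
from statistics import median


def filter_outliers(values, threshold=3.5):
    """Split values into (kept, flagged) using the modified z-score.

    The score uses the median and the median absolute deviation (MAD),
    so a handful of extreme points cannot distort the baseline.
    """
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # No spread to compare against, so nothing can be flagged.
        return list(values), []
    kept, flagged = [], []
    for v in values:
        score = 0.6745 * abs(v - med) / mad
        (flagged if score > threshold else kept).append(v)
    return kept, flagged


# Hypothetical feature column with one injected extreme value.
readings = [10.1, 9.8, 10.3, 10.0, 9.9, 10.2, 55.0]
kept, flagged = filter_outliers(readings)
print("kept:", kept)
print("flagged for review:", flagged)
```

A filter like this only catches poisoned points that look statistically unusual. Carefully crafted poison that resembles normal data would pass through, so it complements rather than replaces provenance checks on the dataset.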

The ASD has developed the Strategies to Mitigate Cyber Security Incidents to help organisations protect their IT networks from cyber threats. The most important of these are the Essential Eight. The Essential Eight Maturity Model guides their implementation, drawing on ASD’s experience in cyber threat intelligence, incident response, and penetration testing, and in helping organisations apply the Essential Eight.9

Hallucination:

AI systems can produce incorrect results, including false positives, false negatives, or fabricated information such as references that do not exist.6

Possible Solution: Human Oversight And Control

When deploying AI, organisations must ensure strong human oversight and control. Risk assessments should be carried out throughout the entire lifecycle of an AI product, carefully weighing potential benefits against possible harms. Operators must also consider the broader environment in which AI systems function, including any connected systems, to fully understand associated risks. For critical operations, reliance solely on AI-generated outputs should be avoided, with human review remaining essential in decision-making.
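One simple way to keep a human in the loop is a routing rule that never auto-accepts critical or low-confidence outputs. The sketch below assumes the AI system exposes a confidence score and that each decision is already tagged as critical or not; the `AIOutput` type, the `route` function, and the 0.9 threshold are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AIOutput:
    summary: str
    confidence: float  # model-reported score in [0, 1]; assumed to be available
    critical: bool     # True when the decision affects a critical operation


def route(output: AIOutput, min_confidence: float = 0.9) -> str:
    """Send critical or low-confidence outputs to a human reviewer."""
    if output.critical or output.confidence < min_confidence:
        return "human_review"
    return "auto_accept"


print(route(AIOutput("Archive old log files", 0.97, critical=False)))
print(route(AIOutput("Shut down pump station", 0.99, critical=True)))
print(route(AIOutput("Archive old log files", 0.55, critical=False)))
```

Note that critical decisions go to review regardless of confidence, since a high score does not rule out a hallucinated result.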

Under the Security of Critical Infrastructure Act 2018 (SOCI Act), owners, operators, and direct interest holders of critical infrastructure assets are required to maintain a Critical Infrastructure Risk Management Program (CIRMP), which includes cyber and information security risks. While entities have discretion in how they manage hazards, they are strongly encouraged to adopt AI-specific risk frameworks, such as ISO/IEC 42001:2023 or the NIST AI Risk Management Framework.10

Privacy Concerns:

Personal data is often anonymised to protect individuals’ identities, and when done properly, re-identification should be very difficult. However, with the rise of AI, there are growing concerns that malicious actors could use advanced techniques to re-identify individuals within large anonymised datasets, undermining privacy protections.11

Possible Solution: Data Anonymisation & Strong Cyber Security Hygiene

Apply anonymisation techniques to protect sensitive data attributes, ensuring confidentiality while still enabling AI models to identify patterns and produce accurate predictions.12
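One common way to gauge re-identification risk is k-anonymity: every combination of indirectly identifying attributes should be shared by at least k records. The sketch below is an illustration only, not a method taken from the cited guidance. The records, the `generalise` rule (10-year age bands and truncated postcodes), and the `smallest_group` helper are all hypothetical.

```python
from collections import Counter


def smallest_group(records, quasi_identifiers):
    """Return k: the size of the smallest group sharing the same quasi-identifiers."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values())


def generalise(record):
    """Coarsen age into a 10-year band and keep only the first two postcode digits."""
    band = record["age"] // 10 * 10
    return {
        **record,
        "age": f"{band}-{band + 9}",
        "postcode": record["postcode"][:2] + "**",
    }


# Hypothetical records; "condition" is the sensitive attribute the model needs.
raw = [
    {"age": 34, "postcode": "4000", "condition": "A"},
    {"age": 37, "postcode": "4006", "condition": "B"},
    {"age": 52, "postcode": "4101", "condition": "A"},
    {"age": 58, "postcode": "4105", "condition": "C"},
]
anonymised = [generalise(r) for r in raw]

print("k before:", smallest_group(raw, ["age", "postcode"]))
print("k after:", smallest_group(anonymised, ["age", "postcode"]))
```

Before generalisation every record is unique on age and postcode, so k is 1. After generalisation each record shares its values with one other, so k rises to 2 while the sensitive attribute stays usable for pattern finding.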

AI systems are more vulnerable if an organisation’s overall cyber security posture is weak. Implementing and maintaining robust, organisation-wide measures, such as strong encryption, least-privilege access, strict password policies, and multi-factor authentication, can significantly reduce the attack surface, including risks to AI systems, sensitive data, and critical services. It is equally important to ensure employees adhere to established cyber security policies and updated frameworks when adopting AI or other emerging technologies.13

Statistical Bias:

AI systems can develop bias when flaws or imbalances exist in the training data, leading to skewed or inaccurate results. Issues such as sampling bias or biased data collection can negatively impact performance and outcomes. If not addressed, statistical bias reduces both the accuracy and effectiveness of AI systems.14

Possible Solution: Mitigation Strategies

- Regular training data audits: Conduct frequent reviews of training datasets to detect, assess, and remediate issues that could lead to systematic inaccuracies.

- Representative training data: Ensure datasets are comprehensive and representative of the full range of relevant information. Properly separate data into training, development, and evaluation sets to accurately measure statistical bias and overall performance.

- Edge case management: Identify and address edge cases that may cause models to behave unexpectedly or produce errors.

- Bias testing and correction: Maintain a repository of observed biased outputs and use this evidence to strengthen training data audits and apply reinforcement learning to reduce measurable bias.15
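The data separation and representativeness checks above can be sketched as follows. The example assumes a list of records with a hypothetical `region` attribute, an 80/10/10 split, and a fixed seed for reproducibility; none of these specifics come from the cited guidance.

```python
import random
from collections import Counter


def split_dataset(rows, seed=0, train=0.8, dev=0.1):
    """Shuffle once, then cut into training, development, and evaluation sets."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_train = round(len(rows) * train)
    n_dev = round(len(rows) * dev)
    return rows[:n_train], rows[n_train:n_train + n_dev], rows[n_train + n_dev:]


def group_shares(rows, key):
    """Return the share of rows belonging to each value of `key`."""
    counts = Counter(r[key] for r in rows)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


# Hypothetical dataset: one in four records comes from a regional area.
rows = [{"id": i, "region": "regional" if i % 4 == 0 else "metro"} for i in range(100)]
train_set, dev_set, eval_set = split_dataset(rows)

print("overall:", group_shares(rows, "region"))
print("train:  ", group_shares(train_set, "region"))
print("eval:   ", group_shares(eval_set, "region"))
```

Comparing each split's shares with the overall shares is a quick audit for sampling bias. If a group is badly under-represented in one split, a stratified split may be a better choice than a plain shuffle.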

Frequent And Severe Cyber Threats:

AI technologies are expected to increase both the effectiveness and efficiency of cyber intrusion operations, potentially leading to more frequent and severe cyber threats. AI can be applied to automate, enhance, plan, or scale physical attacks, such as those involving autonomous systems like drones, as well as cyber compromises of networks and systems. It also lowers the technical barriers for conducting offensive cyber operations, including malware development and reconnaissance. In the case of phishing, generative AI can be used to craft highly realistic and personalised emails, text messages, or social media messages, scrape and analyse large volumes of personal data, and automate the delivery of malicious content. This use of AI makes phishing campaigns significantly more effective and harder to detect.16

Possible Solution: Preparedness And Response

Organisations must be ready to act when risks materialise. Continuous logging and monitoring of AI system inputs and outputs is essential to detect anomalies or potential malicious activity. This requires establishing a clear baseline of normal system behaviour to accurately identify deviations. A well-defined response plan should be developed in advance, outlining how incidents or errors may impact operations and detailing contingency measures to maintain business continuity. Under the SOCI Act, critical infrastructure owners and operators are required to report cyber security incidents with relevant or significant impacts, including those involving AI.17
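A baseline-and-deviation check of the kind described here might look like the sketch below. It assumes output length is a useful signal and that a sample of normal outputs is available. The `OutputMonitor` class, the tolerance of three standard deviations, and the sample figures are hypothetical.

```python
from statistics import mean, stdev


class OutputMonitor:
    """Flag AI outputs whose length deviates sharply from a recorded baseline."""

    def __init__(self, baseline_lengths, tolerance=3.0):
        self.mean = mean(baseline_lengths)
        self.std = stdev(baseline_lengths)
        self.tolerance = tolerance

    def is_anomalous(self, output_text: str) -> bool:
        deviation = abs(len(output_text) - self.mean)
        if self.std == 0:
            # A perfectly flat baseline: any difference counts as a deviation.
            return deviation > 0
        return deviation / self.std > self.tolerance


# Hypothetical baseline: character counts of responses seen during normal operation.
monitor = OutputMonitor([100, 110, 95, 105, 90])

print(monitor.is_anomalous("x" * 102))   # close to the baseline
print(monitor.is_anomalous("x" * 5000))  # far outside the baseline
```

In practice the same pattern can track other signals, such as request rates or refusal counts, with flagged events written to a log that feeds the response plan.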

Limited AI Regulations:

AI technologies are advancing rapidly and have wide-ranging impacts, making it essential for organisations to carefully assess their cybersecurity implications. This includes evaluating both the benefits and risks within the organisation’s specific context. With limited regulations currently in place, organisations must take a cautious approach to managing AI-related risks.18

Possible Solution: Using A Trusted AI Vendor

When procuring AI systems, organisations must ensure the credibility of vendors and confirm that the systems originate from jurisdictions with strong legislation, oversight, and adherence to responsible and ethical AI principles. This includes assessing the broader supply chain supporting the AI system and evaluating potential Foreign Ownership, Control, or Influence (FOCI) risks, particularly those arising from the relevant jurisdiction’s transparency and privacy protections. Organisations should also consider applicable data collection laws, contractual terms of use, and the possibility of foreign entities compelling disclosure of sensitive information. Privacy policies must be carefully reviewed, with close attention to provisions relating to the collection, use, and disclosure of personal or sensitive data, including for system training. Under the SOCI Act, entities are required to establish and maintain processes to minimise, mitigate, or eliminate supply chain risks.19

Limited Experience In Managing AI:

Compromise of AI systems that perform or support mission-critical functions presents the potential for immediate and severe consequences. Integrating AI into existing infrastructures expands the cyber-attack surface, creating new avenues for malicious actors to exploit. The novelty, complexity, and limited experience in managing AI within critical infrastructure networks further heighten overall risk exposure.20

Possible Solution: Train Staff & Use Up-To-Date Information

Organisations must ensure they have adequate resources to securely establish, maintain, and operate AI systems. This includes identifying which staff will interact with the system, determining the knowledge required to use it securely, and providing appropriate training. Personnel should be instructed on what types of data may or may not be entered into the AI system, such as personally identifiable information or proprietary intellectual property. Staff must also be trained on the reliability of AI outputs and the organisational processes in place for validating and verifying those outputs.21
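Training can be backed by a technical guardrail that screens prompts before they reach an AI system. The sketch below is a minimal illustration that redacts email addresses and ten-digit phone numbers with regular expressions. The patterns and the `redact` helper are hypothetical and far from exhaustive, so a real deployment would need broader detection.

```python
import re

# Hypothetical patterns for illustration; they do not cover all personal data.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\b0\d(?:[ -]?\d){8}\b"),
}


def redact(prompt: str):
    """Replace matched personal data with placeholders and report what was found."""
    found = []
    for label, pattern in PATTERNS.items():
        if pattern.search(prompt):
            found.append(label)
            prompt = pattern.sub(f"[{label} REDACTED]", prompt)
    return prompt, found


clean, found = redact("Contact jane@example.com or 0412 345 678 about the invoice")
print(clean)
print(found)
```

Reporting which categories were found lets the organisation log near misses and target follow-up training at the teams that need it.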

Conclusion

Significant work remains in addressing the challenges posed by AI, with the Australian government and businesses still working to keep pace with current threats. It is also highly likely that new threats will emerge, requiring the development of new solutions to effectively mitigate them.

Resources

1. Content Credentials: Strengthening Multimedia Integrity in the Generative AI Era

2. Responding to and recovering from cyber attacks; Report 12: 2023–24, Page 1

3. Annual Cyber Threat Report 2023–2024

4. The Commonwealth Cyber Security Posture in 2024

5. Using Content Credentials to help mitigate cyber threats associated with generative AI

6. Using Content Credentials to help mitigate cyber threats associated with generative AI

7. ASD Annual Cyber Threat Report: 2023–2024, Page 53

8. AI Data Security: Best Practices for Securing Data Used to Train & Operate AI Systems, Page 14

9. ASD Annual Cyber Threat Report: 2023–2024, Page 57

10. Factsheet for Critical Infrastructure – Artificial Intelligence in Critical Infrastructure: June 2025, Page 2

11. ASD Annual Cyber Threat Report: 2023–2024, Page 53

12. AI Data Security: Best Practices for Securing Data Used to Train & Operate AI Systems, Page 15

13. Factsheet for Critical Infrastructure – Artificial Intelligence in Critical Infrastructure: June 2025, Page 3

14. AI Data Security: Best Practices for Securing Data Used to Train & Operate AI Systems, Page 16

15. AI Data Security: Best Practices for Securing Data Used to Train & Operate AI Systems, Page 16

16. Factsheet for Critical Infrastructure – Artificial Intelligence in Critical Infrastructure: June 2025, Page 1

17. Factsheet for Critical Infrastructure – Artificial Intelligence in Critical Infrastructure: June 2025, Page 3

18. Engaging with artificial intelligence

19. Factsheet for Critical Infrastructure – Artificial Intelligence in Critical Infrastructure: June 2025, Page 3

20. Factsheet for Critical Infrastructure – Artificial Intelligence in Critical Infrastructure: June 2025, Page 2

21. Engaging with artificial intelligence